Are you still on the fence about whether generative artificial intelligence can do the work of human lawyers? If so, I urge you to read this new study.

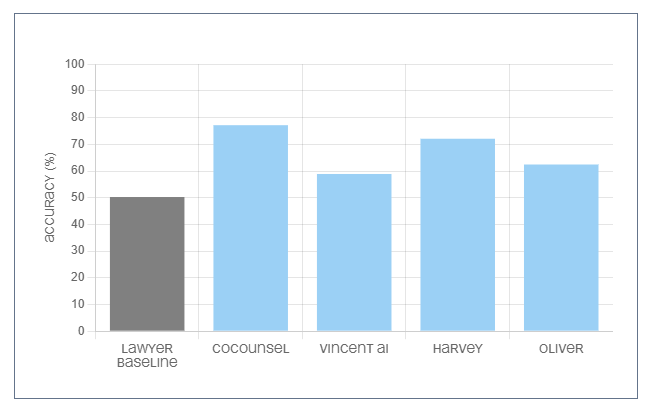

Published yesterday, this first-of-its-kind study evaluated the performance of four legal AI tools across seven core legal tasks. In many cases, it found, AI tools can perform at or above the level of human lawyers, while offering significantly faster response times.

The Vals Legal AI Report (VLAIR) represents the first systematic attempt to independently benchmark legal AI tools against a lawyer control group, using real-world tasks derived from Am Law 100 firms.

It evaluated AI tools from four vendors (Harvey, Thomson Reuters (CoCounsel), vLex (Vincent AI), and Vecflow (Oliver)) on tasks including document extraction, document Q&A, summarization, redlining, transcript analysis, chronology generation, and EDGAR research.

LexisNexis initially participated in the benchmarking but, after the report was written, chose to withdraw from all the tasks in which it participated except legal research. The results of the legal research benchmarking will be published in a separate report.

Key Findings

Harvey Assistant emerged as the standout performer, achieving the highest scores in five of the six tasks it participated in, including an impressive 94.8% accuracy rate for document Q&A. Harvey exceeded lawyer performance in four tasks and matched the baseline in chronology generation.

(Each vendor could choose which of the evaluated skills it wished to opt into.)

“Harvey’s platform leverages models to provide high-quality, reliable assistance for legal professionals,” the report said. “Harvey draws upon multiple LLMs and other models, including custom fine-tuned models trained on legal processes and data in partnership with OpenAI, with each query of the system involving between 30 and 1,500 model calls.”

CoCounsel from Thomson Reuters was the only other vendor whose AI tool received a top score (77.2% for document summarization), and it consistently ranked among the top-performing tools across all four tasks it participated in, with scores ranging from 73.2% to 89.6%.

The Lawyer Baseline (the results produced by a lawyer control group) outperformed the AI tools on two tasks, EDGAR research (70.1%) and redlining (79.7%), suggesting these areas may remain, for now at least, better suited to humans. AI tools collectively surpassed the Lawyer Baseline on document analysis, information retrieval, and data extraction tasks.

Perhaps not surprisingly, the study found a dramatic difference in response times between AI and humans. The report found that AI tools were “six times faster than the lawyers at the lowest end, and 80 times faster at the highest end,” making a strong case for AI tools as efficiency drivers in legal workflows.

“The generative AI-based systems provide answers so quickly that they can be useful starting points for lawyers to begin their work more efficiently,” the report concluded.

Document Q&A produced the highest scores of any task in the study, leading the report to conclude that it is a task for which lawyers should find value in using generative AI.

The report found that Harvey Assistant was consistently the fastest, with CoCounsel also being “extremely quick,” both providing responses in less than a minute.

But it also said that Vincent AI “gave responses exceptionally quickly as often one of the fastest products we evaluated.”

Oliver was found to be the slowest, often taking five minutes or more per query. The report said this is likely due to Oliver’s agentic workflow, which breaks tasks into multiple steps.

Vendor-Specific Performance

Harvey, the fastest-growing legal technology startup in the space (having raised over $200 million and achieved unicorn status since its founding in 2022), opted into more tasks than any other vendor and received the top scores in document Q&A, document extraction, redlining, transcript analysis, and chronology generation.

“Harvey Assistant either matched or outperformed the Lawyer Baseline in five tasks and it outperformed the other AI tools in four tasks evaluated,” the report said. “Harvey Assistant also received two of the three highest scores across all tasks evaluated in the study, for Document Q&A (94.8%) and Chronology Generation (80.2%, matching the Lawyer Baseline).”

CoCounsel 2.0 from Thomson Reuters was submitted for four of the tasks and consistently performed well, the study found, achieving an average score of 79.5% across its four evaluated tasks, the highest average score in the study. It particularly excelled at document Q&A (89.6%) and document summarization (77.2%).

“CoCounsel surpassed the Lawyer Baseline in those four tasks alone by more than 10 points,” the study said.

For document summarization, all the gen AI tools performed better than the Lawyer Baseline.

Vincent AI from vLex participated in six tasks, second only to Harvey in number of tasks, with scores ranging from 53.6% to 72.7%, outperforming the Lawyer Baseline on document Q&A, document summarization, and transcript analysis.

The report said that Vincent AI’s design is particularly noteworthy for its ability to infer the appropriate subskill to execute based on the user’s question, and that the answers it provided were “impressively thorough.”

Oddly (I thought), the report praised Vincent AI for refusing to answer questions when it didn’t have sufficient data to answer, rather than give a hallucinated answer. But the report said those refusals to answer also negatively affected its scores.

Oliver, launched last September from the startup Vecflow, was described in the report as “the best-performing AI tool” on the challenging EDGAR research task. That would seem a given, since it was the only AI tool to participate in that task. It scored 55.2% against the Lawyer Baseline’s 70.1%.

The report highlighted Oliver’s “agentic workflow” approach as potentially valuable for complex research tasks requiring multiple steps and iterative decision-making, and said it excels at explaining its reasoning and actions as it works.

“Oliver bested at least one other product for every task it opted into,” the report said. “Oliver also outperformed the Lawyer Baseline for Document Q&A and Document Summarization.”

Methodology

The study was developed in partnership with Legaltech Hub and a consortium of law firms including Reed Smith, Fisher Phillips, McDermott Will & Emery, and Ogletree Deakins, along with four anonymous firms. The consortium created a dataset of over 500 samples reflecting real-world legal tasks.

Vals AI developed an automated evaluation framework to provide consistent assessment across tasks. The study notes that the lawyer control group was “blind”: participating lawyers were unaware they were part of a benchmarking study and received assignments formatted as typical client requests.

Tara Waters was Vals AI’s project lead for the study.

Future Directions

The report indicates this benchmark is the first iteration of what it says will be a regular evaluation of legal industry AI tools, with plans to repeat this study annually and add others. Future iterations may expand to include more vendors, more tasks, and coverage of international jurisdictions beyond the current U.S. focus.

“There is growing momentum across the legal industry for standardized methodologies, benchmarking, and a shared language for evaluating AI tools,” the report notes.

Nicola Shaver and Jeroen Plink of Legaltech Hub were credited for their “partnership in conceptualizing and designing the study and bringing together a high-quality cohort of vendors and law firms.”

“Overall, this study’s results support the conclusion that these legal AI tools have value for lawyers and law firms,” the study concludes, “although there remains room for improvement in both how we evaluate these tools and their performance.”